Are you struggling to understand what AI agents actually are? Wondering how they differ from chatbots or AI assistants, and whether they can truly transform your business operations?

In this article, you'll discover a proven process for building AI agents that work in the real world, from initial workflow analysis to deployment and quality control.

How Are AI Agents Different From AI Assistants?

One of the most significant misconceptions about AI agents is that they're essentially the same as chatbots or AI assistants. In reality, these represent distinctly different categories of AI tools, and understanding the difference is crucial for anyone looking to implement them effectively.

The key distinction comes down to one word: agency. This is about autonomy and the ability to act independently within defined parameters.

When you interact with ChatGPT to write a blog article, you're working with an AI assistant. You send an idea to the system, and it responds. You provide feedback, the AI iterates, and the cycle continues. AI assistants like ChatGPT feel magical and are genuinely useful tools. However, nothing happens unless you're actively driving the process. You remain in the driver's seat at every stage. If you stop prompting, the work stops.

AI agents work fundamentally differently. These are AI tools with autonomy. They have the ability to reason about a situation, plan a course of action, and execute tasks on your behalf without requiring constant human input at every step. They read their environment, understand the task at hand, have access to various tools and decision-making processes, and can actually complete work independently.
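To make the distinction concrete, here is a minimal Python sketch. It assumes a hypothetical `lookup_order` tool and replaces the language model's reasoning with a rule-based `decide` function so the example runs offline. In a real agent, that step would be a model call. The point is structural: the assistant returns once per prompt, while the agent loops through reasoning, acting, and observing until it decides the task is done.

```python
from typing import Callable

# Hypothetical tool; a real agent might wrap email, a CRM, or a database.
def lookup_order(order_id: str) -> str:
    orders = {"A100": "shipped", "A200": "pending"}
    return orders.get(order_id, "unknown")

TOOLS: dict[str, Callable[[str], str]] = {"lookup_order": lookup_order}

def assistant(prompt: str) -> str:
    """An assistant answers one prompt; nothing else happens until the human asks again."""
    return f"Draft reply for: {prompt}"

def decide(goal: str, observations: list[str]) -> tuple[str, str]:
    """Stand-in for the model's reasoning step (rule-based so the sketch runs offline)."""
    order_id = goal.split()[-1]
    if not observations:
        return ("lookup_order", order_id)  # plan: gather information first
    return ("finish", f"Order {order_id} is {observations[-1]}")

def agent(goal: str, max_steps: int = 5) -> str:
    """An agent loops: reason, pick a tool, act, observe, until it decides it is done."""
    observations: list[str] = []
    for _ in range(max_steps):
        action, arg = decide(goal, observations)
        if action == "finish":
            return arg
        observations.append(TOOLS[action](arg))
    return "Stopped: step limit reached"

print(agent("Check status of order A100"))
```

The `max_steps` cap is a design choice worth keeping even in sketches: an autonomous loop needs an exit that doesn't depend on the model deciding to stop.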

When you have multiple AI agents working together in what's called an agentic workflow, you're essentially creating an AI team. These team members can collaborate with each other to accomplish complex tasks autonomously, similar to how a human team might divide work, coordinate efforts, and produce results without requiring management oversight of every single action.

Autonomy is what makes AI agents powerful, but it's also what makes them more complex to build and deploy properly. You're not just creating a tool you'll supervise closely; you're creating something you'll trust to act on your behalf, which requires a completely different approach to development and implementation.

What Are the Benefits of Properly Implemented AI Agents?

When AI agents are built correctly and deployed thoughtfully, they unlock capabilities that fundamentally change what's possible for a business. Sara Davison, an agentic AI practitioner, acknowledges that many people advise against anthropomorphizing AI, but she finds the analogy to human teams genuinely useful because the parallels are so strong.

The primary benefit is the ability to scale almost infinitely with tools that function like team members or entire departments. These agents can perform work in the way you specifically want it done, following your processes and embodying your expertise. This isn't just about automating tasks. It's about scaling your unique approach.

This leads to perhaps the most significant strategic advantage: the ability to codify what Sara calls the secret sauce. In a world where everyone has access to ChatGPT, Claude, and other large language models, competitive differentiation becomes more challenging. If everybody can access the same powerful AI tools, what creates a moat around your business?

The answer lies in customization. Through the agentic framework, businesses can build a layer around their operations that captures what makes their processes, workflows, and approach genuinely unique. This might be how they analyze customer problems, how they develop solutions, how they communicate with clients, or any number of other distinguishing factors.

By capturing this secret sauce in agentic systems, companies create something their competitors cannot simply copy, even if those competitors have access to the same underlying AI models. This becomes intellectual property that provides a genuine competitive advantage.

The 4-Step, Evolutionary Framework to Build an AI Agent

Together with co-founder Tyler, Sara has established a systematic methodology for developing AI agents that perform reliably in production environments.

Sara is adamant that businesses shouldn't rush to give AI agents full autonomy over sensitive information or critical processes. Many concerns about AI agents going wrong stem from organizations that skipped crucial steps in building trust and reliability before granting autonomy.

The philosophy Sara and Tyler have developed through their work is what they call evolution, not revolution. This means taking a gradual, measured approach to implementing AI agents. You don't hand an intern the keys to the castle on their first day. There's a process of building trust and reliability before delegating significant autonomy. The same principle applies to AI agents.

Just because tools like AgentKit from OpenAI make it incredibly accessible to build an agent doesn't mean those agents will work properly in real-world applications. The tools are easy to use, but the methodology behind building agents that behave correctly requires substantial work and expertise. The accessibility of agent-building tools has created a dangerous gap between the ease of creating an agent and the difficulty of creating one that actually works reliably.

This is why the evolutionary approach matters so much. Businesses need to gradually provide autonomy as they verify that their agents perform reliably, meet quality standards, and handle edge cases appropriately. This methodical process protects businesses from the very real risks of deploying autonomous systems prematurely.
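One way to picture evolution, not revolution, in code is to gate each action behind an autonomy level the agent has earned. The levels, action names, and risk assignments below are hypothetical illustrations, not a scheme Sara describes; the idea is simply that raising an agent's level is a deliberate decision made after its work has been verified.

```python
from enum import IntEnum

class Autonomy(IntEnum):
    """Hypothetical autonomy levels, granted one at a time as reliability is proven."""
    DRAFT_ONLY = 1  # agent prepares work; a human does everything else
    LOW_RISK = 2    # agent may take reversible, internal actions
    HIGH_RISK = 3   # agent may take external or hard-to-reverse actions

# Hypothetical minimum level each action requires.
ACTION_RISK = {
    "draft_plan": Autonomy.DRAFT_ONLY,
    "update_internal_notes": Autonomy.LOW_RISK,
    "email_client": Autonomy.HIGH_RISK,
}

def authorize(action: str, granted: Autonomy) -> str:
    """Execute if the agent's granted level covers the action; otherwise escalate to a human."""
    return "execute" if granted >= ACTION_RISK[action] else "escalate_to_human"

print(authorize("email_client", Autonomy.LOW_RISK))
```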

#1: The Essential First Step: Workflow and DocGen

When a client approaches Sara and Tyler wanting to build an AI agent for a specific process, the first thing they do might seem counterintuitive: they don't touch any AI agent tools at all.

The foundation of successful AI agent implementation is developing a deep understanding of the workflow as it currently exists. Without this understanding, you're essentially just dragging and dropping nodes in a tool and hoping for the best. That approach doesn't capture what actually happens in real business environments.

The discovery process involves going deep to really understand the workflow end-to-end. This means identifying who is involved in the process and why those particular people are involved. It means understanding what each person's job actually entails, not just what their job description says, but what they actually do day to day. It means learning what happens when the process breaks down and how people currently work around those breakdowns.

The goal is to identify gaps and opportunities while capturing what makes certain team members particularly excellent at their roles.

In this context, a workflow is any process a team follows from start to finish. Examples include putting together a marketing strategy that involves several people, a customer service workflow within a particular department, or the creation of product requirement documents. Essentially, any process with a defined flow for how something gets done qualifies.

Sara emphasizes that most people stop at the standard operating procedure level, and this is where most AI agent implementations produce bland, unsatisfying results. The SOP doesn't capture why Sue is exceptional at her job or why Tom can intuitively look at an application and make accurate gut-check decisions. People with deep domain expertise often don't even realize they're doing something specific that makes everything work. But they are, and capturing that tacit knowledge is essential.

Through their work, Sara and Tyler have identified different layers of what they call work intelligence. There's the SOP or standard layer, which represents the idealized version of how everything should happen in a perfect world. Underneath that sits the operational reality, which reflects what actually happens when processes meet real-world conditions.

Often, there are band-aids across the situation where people intuitively know how to fix things, but these workarounds aren't documented in any SOP. They happen in Slack messages or quick conversations. People solve problems and move on without ever formalizing the solution.

Then there's the contextual intelligence layer. This is about understanding what makes Sue instinctively know exactly what to do end to end. Defining and understanding this contextual intelligence allows the team to capture Sue's magic when they build out the AI agent system. The goal is to preserve the domain expertise that she has acquired through years of experience and enable her to do her work in a way that meets her own high expectations, but at scale.
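A sketch of how discovery notes might be organized by these three layers and rendered into an agent's instructions. The `WorkIntelligence` class, its fields, and the example entries are hypothetical; the point is that workarounds and expert judgment get written down alongside the SOP instead of being left out.

```python
from dataclasses import dataclass, field

@dataclass
class WorkIntelligence:
    """Discovery notes organized by layer (field names are hypothetical)."""
    sop: list[str]                                                     # the idealized process
    operational_reality: list[str] = field(default_factory=list)      # undocumented workarounds
    contextual_intelligence: list[str] = field(default_factory=list)  # the expert's judgment calls

    def to_instructions(self, role: str) -> str:
        """Render the layers as agent instructions, skipping any layer left empty."""
        def bullets(items: list[str]) -> str:
            return "\n".join(f"- {item}" for item in items)

        parts = [f"You are the {role}.", "Standard procedure:\n" + bullets(self.sop)]
        if self.operational_reality:
            parts.append("Known workarounds:\n" + bullets(self.operational_reality))
        if self.contextual_intelligence:
            parts.append("Expert judgment to apply:\n" + bullets(self.contextual_intelligence))
        return "\n\n".join(parts)

# Hypothetical example entries for an application-review step.
notes = WorkIntelligence(
    sop=["Confirm the application form is complete"],
    operational_reality=["If the budget field is blank, ask for it in the follow-up email instead of rejecting"],
    contextual_intelligence=["Flag plans where the budget looks too small for the planned acreage"],
)
print(notes.to_instructions("application reviewer"))
```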

Sara shares a detailed example to illustrate how this works in practice. Document generation, which they call DocGen, has become such a common request that it's now a category they specifically teach. DocGen refers to using an agentic team to compress work that would normally take top domain experts weeks into a much shorter timeframe.

Consider creating a brand strategy document, a tech roadmap, or a marketing plan. These typically involve extensive consultation from various experts, often requiring weeks to synthesize information from multiple sources, conduct research, interview stakeholders, and ultimately produce a comprehensive strategic document. This process depends heavily on unstructured data including interviews, emails, research from the internet, and various other information sources.

Without AI, these DocGen processes are inherently manual and time-consuming. Because they depend on your best people, you can't scale beyond their availability and capacity.

Through agentic DocGen systems, businesses can take all that unstructured data and feed it into an agentic workflow where a team of expert agents, functioning almost like a specialized department structured similarly to how a human team would be organized, works together to complete the task. This can collapse timeframes dramatically, from something like eleven weeks down to eleven minutes, while still delivering the deep domain expertise you would get from your best human team members.

Tyler's family business provided a real example that Sara uses in their advanced course. The family runs a greenhouse supplies business that manufactures and sells greenhouse equipment. Part of their market consists of market gardeners, people who want to start small farm businesses to sell at farmers' markets or to cooperatives. These aren't massive agricultural operations, just small commercial growing ventures.

Tyler's father, Roger, possesses deep domain expertise in market gardening. He understands incredibly specific details like soil pH levels in Virginia versus Los Angeles, what crops grow best in different climates, and how to structure a viable small farm business. Roger used to provide individual coaching to people wanting to start these businesses, but individual coaching doesn't scale.

The DocGen workflow they built addresses this limitation. The challenge was taking Roger and his team's expertise, which would normally require extensive one-on-one consultation to develop a customized business plan for each prospective market gardener, and scaling it through an agentic system. In reality, writing a comprehensive market garden business plan might take several weeks of work from multiple domain experts. Their competitors actually charge six figures for such plans.

The agentic workflow they developed allows Roger to have a ninety-minute consultation call with someone who wants to start a market garden business. That transcript, along with perhaps some additional notes, gets fed into the agentic system as unstructured data. The system then generates a highly specialized thirty-five-page document customized to that person's specific location, budget, branding preferences, and all the other variables that go into a viable market garden plan.

This represents genuine scaling of expertise. The business is no longer constrained by Roger's availability or how many people he can hire and train to his level of knowledge. The business has essentially captured Roger's expertise and that of his team in a way that can serve unlimited clients while maintaining the quality and depth that previously required intensive personal consultation.

#2: The Mock-up: Create a Minimum Viable AI Agent

Once Sara and Tyler thoroughly understand a workflow through their discovery process, they move into creating what they call a mockup. This show-up-with-a-mock-up approach has fundamentally changed how they work with clients and how they teach others to build AI agents.

The challenge many clients face is that AI agents remain somewhat abstract. People understand AI is important, but they struggle to grasp why it matters specifically to their business or how it would actually work in their environment. Showing up with a working mockup solves this problem immediately. Because the available tools have become so accessible, creating a mini MVP to demonstrate concepts is now relatively straightforward.

After completing their discovery work, Sara and Tyler can quickly pull together a simple version of what the final system might look like. They give this to the client with instructions to test it and provide feedback about what works, what doesn't, and what's missing. This hands-on experience becomes the foundation for meaningful feedback.

The mockup reveals crucial information. Does the workflow need more agents because additional specialists need to be involved? Or is the system overcomplicated and trying to do too much? What happens when processes break in ways that weren't anticipated during discovery? Often, testing reveals gaps in the process that weren't apparent during the interview phase.

This creates a circular feedback loop. The mockup generates insights that inform how to improve the agents. The improved agents get tested again, revealing new refinements. This iteration continues until the system reliably handles the real workflow with all its complexity and edge cases.

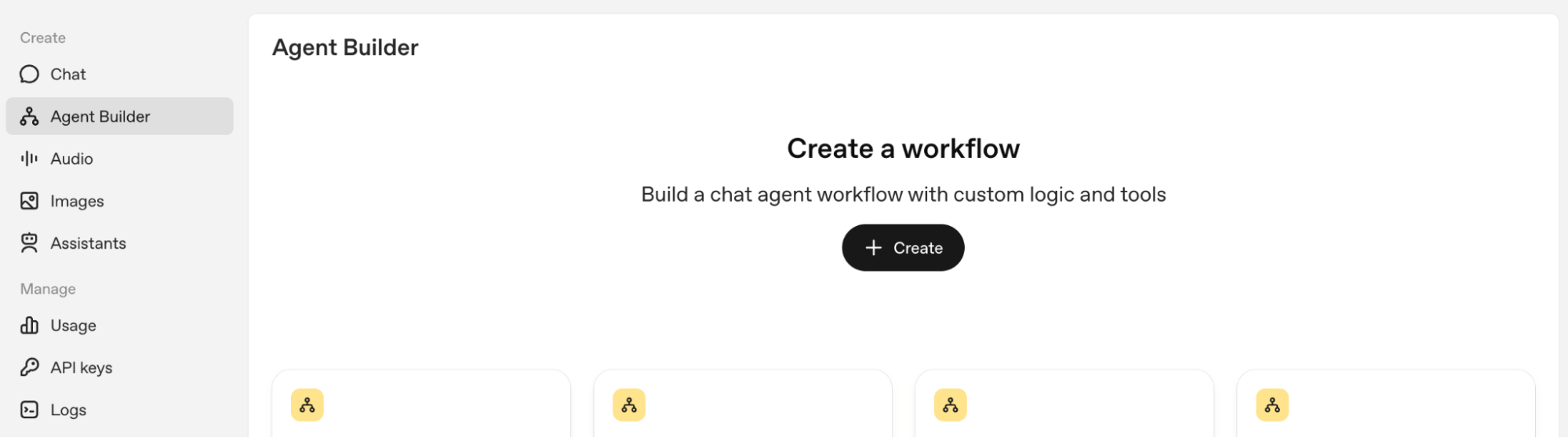

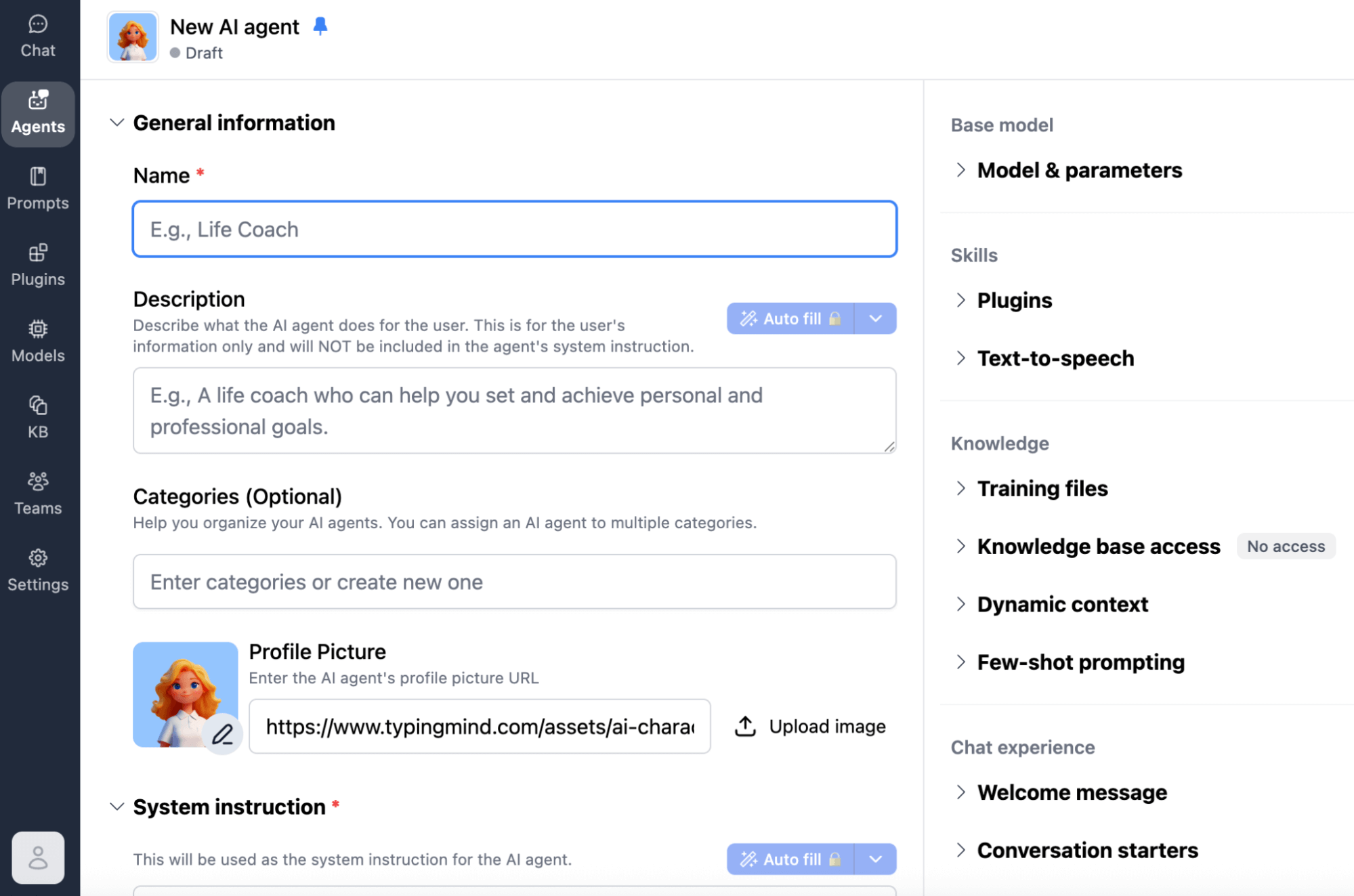

For their day-to-day mockup testing, Sara and Tyler have found certain tools particularly useful. They use a tool called TypingMind, which functions as a centralized playground for multiple large language models. It connects the major AI providers, such as OpenAI and Anthropic, in one interface and supports the Model Context Protocol (MCP) for connecting agents to external tools, which makes it easy to build and test agents quickly. This allows rapid experimentation without committing to any particular platform or provider.

They also work extensively in a tool called Cassidy AI, which they specifically teach in their courses because it balances accessibility with power. Someone without a technical background can learn to build functional agents in Cassidy, while the platform still provides enough capability for sophisticated implementations.

However, Sara emphasizes a critical principle that guides all their work: remain large language model, tool, and platform agnostic. The AI landscape is moving incredibly fast. A business that commits its entire infrastructure and development costs to relying on one tool or platform creates dangerous fragility. When that tool changes, gets discontinued, or gets superseded by something better, the business faces a crisis.

Their systems are designed to allow easy switching between large language models. If OpenAI releases a new version, they can integrate it into their builds and their client builds without rebuilding everything from scratch. This flexibility protects against obsolescence and allows businesses to take advantage of improvements as they emerge.
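The sketch below shows one common way to keep a workflow model-agnostic: the workflow depends on a small interface, and each provider sits behind an adapter. `EchoModel` and `UpperModel` are hypothetical offline stand-ins; real adapters would wrap each provider's own SDK, which is not shown here.

```python
from typing import Protocol

class ChatModel(Protocol):
    """Anything that turns instructions plus input into text."""
    def complete(self, system: str, user: str) -> str: ...

class EchoModel:
    """Hypothetical offline backend, handy for testing the workflow without a provider account."""
    def complete(self, system: str, user: str) -> str:
        return f"[echo] {user}"

class UpperModel:
    """A second hypothetical backend, to show that swapping needs no workflow changes."""
    def complete(self, system: str, user: str) -> str:
        return user.upper()

# The model choice is configuration, not code baked into each agent.
MODELS: dict[str, ChatModel] = {"echo": EchoModel(), "upper": UpperModel()}

def run_step(model_name: str, system: str, user: str) -> str:
    return MODELS[model_name].complete(system, user)

print(run_step("echo", "You are a soil expert.", "hello"))
print(run_step("upper", "You are a soil expert.", "hello"))
```

Adopting a newly released model then means writing one adapter and changing one configuration value, rather than rebuilding every agent.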

The tools mentioned, TypingMind and Cassidy AI, function as interfaces that allow developers to choose the underlying large language model on the backend, whether that's Google Gemini, Claude, ChatGPT, or others. They also enable quick mockups that people can interface with directly, without needing their own accounts with specific AI providers. This makes testing with actual users straightforward and removes technical barriers.

Sara stresses that success with AI agents isn't really about the tools themselves. The tools are just plumbing, the infrastructure that makes things work more easily. The real determinant of success is the mental models, frameworks, and approaches you understand and apply. You could use n8n, Make, Copy, or numerous other tools to build functional agentic systems. What matters is understanding the operational workflow and procedures required to make agents work reliably.

Tools like n8n are highly technical and can be confusing for many people, even though developers use them widely. Make can accomplish much the same work with a somewhat friendlier interface. Having access to these tools means nothing if you don't know what you need them to do. That's where understanding methodology becomes absolutely critical. You need to know not just how to drag and drop to build an agent, but what it actually takes to build AI agents that function properly in real business environments.

#3: Evolve AI Assistants to AI Agents and Deploy AI Agent Teams

After creating and testing mockups, the next phase involves actually building the workflow and evolving the system from assistants into true agents. This isn't a simple switch that you flip. It's a spectrum of gradually increasing autonomy that you provide to your agents throughout an iterative process.

The transformation is characterized by several key elements that need to be built and configured properly. First, you want to give your agent what Sara anthropomorphically calls a brain. This is the central source of intelligence, which is the large language model. The model provides baseline intelligence, but that's just the starting point.

Next, you need to give the agent memory and context. This involves providing access to information that contextualizes the agent to your specific business, your unique processes, your SOPs, and all the layers of knowledge that sit on top of the base model. The large language model knows how to reason and communicate, but it doesn't know anything about your company's approach or methods. Providing this context is essential.
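A minimal sketch of supplying business context: pick the most relevant internal documents for a task and place them in the prompt. Word overlap is used here as a deliberately simple stand-in for the embedding-based search many production systems use, and the document names and contents are hypothetical.

```python
import re

def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z]+", text.lower()))

def retrieve(query: str, documents: dict[str, str], k: int = 2) -> list[str]:
    """Return up to k document names sharing the most words with the query, ignoring zero matches."""
    q = tokenize(query)
    overlap = {name: len(q & tokenize(text)) for name, text in documents.items()}
    ranked = sorted(overlap, key=overlap.get, reverse=True)
    return [name for name in ranked[:k] if overlap[name] > 0]

def build_prompt(task: str, documents: dict[str, str]) -> str:
    """Combine the retrieved company knowledge with the task the model should perform."""
    context = "\n".join(documents[name] for name in retrieve(task, documents))
    return f"Company context:\n{context}\n\nTask: {task}"

# Hypothetical company knowledge base.
docs = {
    "pricing_sop": "How we quote pricing for greenhouse kits",
    "brand_voice": "Our brand voice is casual and friendly",
    "returns": "Returns policy for damaged parts",
}
print(build_prompt("What brand voice should the reply use?", docs))
```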

The agent needs the capability for multi-step planning. It must exist in an environment where it can receive an input, generate an output, and go through a reasoning process that might involve multiple steps and decisions. This is what enables the agent to actually plan and execute rather than just respond.

As systems become more sophisticated, agents need access to other team members. They need the ability to delegate work among themselves. When you get into advanced multi-agent workflows, something interesting happens. You suddenly realize that these team members need management. With too many agents working without coordination, chaos emerges. This leads to developing what Sara calls an orchestrator agent, essentially a program manager that divvies up tasks among the team, maintains quality control, and ensures work meets standards.

Different types of agents serve different functions within these systems. Domain expert agents bring specialized knowledge to particular aspects of the work. Quality assurance agents specifically check the quality of outputs before they proceed further in the workflow. A copywriting agent might review and refine the actual writing of a document. In the DocGen process Sara described earlier, different agents handle different specialized aspects of creating the final strategic document.

Finally, you want to give agents tools that enable them to perform their tasks and generate outputs. This might mean access to Gmail for sending communications, the ability to retrieve information from a SharePoint site, or a connection to whatever other systems the agent needs to interact with to complete its work.

To make this concrete, Sara walks through how the market garden DocGen workflow actually functions as an agentic system. The workflow begins with an orchestrator agent serving as the program manager. The input consists of a transcript from a ninety-minute consultation call, possibly along with some notes. This is unstructured data that could go in multiple directions.

The orchestrator agent has comprehensive knowledge of all the different team members available. It knows there's a technical expert who understands soil science and growing conditions. There's a business and marketing expert who can develop viable business models and market positioning. There are other specialists covering different domains relevant to creating a complete market garden business plan.

When the orchestrator receives the unstructured input data, it reads and analyzes the information to understand what the prospective market gardener is trying to accomplish. Based on this understanding, it determines how to distribute information and assign work to the various specialist agents. This distribution is based on each agent's specific domain of expertise and role within the overall workflow.

The specialist agents themselves have very narrow, focused domains. This narrowness is actually a strength because it allows genuine depth. If you tried to build one agent to handle the entire market garden planning process, the output would be superficial and generic. By having specialists, each focused on their particular area, the system can achieve the depth that previously required human experts.

Each specialist agent understands its own specific role. One agent might be exclusively responsible for analyzing soil pH levels in different geographic regions and recommending appropriate crops. Another handles business model development and financial projections. Another focuses on branding and market positioning. They understand their individual responsibilities, but they also have awareness of the overall team structure and the ultimate goal everyone is working toward.

The orchestrator agent manages the distribution of work. When it encounters situations where necessary information is missing, perhaps because Roger didn't ask the right question during the consultation, the orchestrator develops a plan for how each agent should proceed given the constraints.
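The routing and gap-finding described above can be sketched as follows. In the real system the orchestrator is itself a language model reading the transcript; here, keyword matching stands in for that judgment so the example runs offline. The `Specialist` class, the keywords, and the transcript lines are hypothetical.

```python
import re
from dataclasses import dataclass

@dataclass
class Specialist:
    name: str
    keywords: set[str]  # hypothetical: words that signal this specialist's domain

    def work(self, notes: list[str]) -> str:
        # Placeholder for a model call using this specialist's instructions.
        return f"{self.name} section based on {len(notes)} note(s)"

def orchestrate(transcript: list[str], team: list[Specialist]) -> tuple[dict[str, str], list[str]]:
    """Route each transcript line to every specialist whose domain it mentions.

    Returns the drafted sections plus the specialists that received no input,
    so those gaps can be planned around rather than guessed at.
    """
    assignments: dict[str, list[str]] = {s.name: [] for s in team}
    for line in transcript:
        words = set(re.findall(r"[a-z]+", line.lower()))
        for s in team:
            if s.keywords & words:
                assignments[s.name].append(line)
    sections = {s.name: s.work(assignments[s.name]) for s in team if assignments[s.name]}
    missing = [s.name for s in team if not assignments[s.name]]
    return sections, missing

team = [
    Specialist("soil", {"soil", "ph", "crops"}),
    Specialist("business", {"budget", "pricing", "market"}),
    Specialist("branding", {"brand", "logo", "name"}),
]
transcript = [
    "Soil pH is around 6.5 here",
    "Budget is $20k for year one",
    "Wants to sell at the farmers market",
]
sections, missing = orchestrate(transcript, team)
print(sections)
print(missing)
```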

The system also incorporates loops for quality control. If the orchestrator agent isn't satisfied with a specialist's output, if it doesn't meet the expected standards, the work goes back for refinement. This iterative improvement continues until the output meets the established quality criteria.
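A sketch of that quality-control loop: the specialist drafts, the orchestrator scores the draft and returns feedback, and the specialist revises until the score clears a threshold. The threshold, the round cap, and the stand-in specialist and reviewer functions are hypothetical; in practice both sides would be model calls.

```python
from typing import Callable

def refine_until_accepted(
    draft: Callable[[str], str],                # specialist: feedback -> new output
    review: Callable[[str], tuple[int, str]],   # orchestrator: output -> (score 1-5, feedback)
    threshold: int = 4,
    max_rounds: int = 3,
) -> tuple[str, bool, int]:
    """Return (final output, accepted?, rounds used).

    The round cap stops an endless revise-and-reject cycle; work that is
    never accepted should be escalated to a human.
    """
    if max_rounds < 1:
        raise ValueError("max_rounds must be at least 1")
    feedback = ""
    for round_no in range(1, max_rounds + 1):
        output = draft(feedback)
        score, feedback = review(output)
        if score >= threshold:
            return output, True, round_no
    return output, False, max_rounds

# Hypothetical specialist and reviewer for a crop-plan section.
def crop_specialist(feedback: str) -> str:
    return "Crop plan including soil pH" if "pH" in feedback else "Crop plan"

def orchestrator_review(output: str) -> tuple[int, str]:
    return (5, "Meets standard") if "soil pH" in output else (2, "Missing soil pH analysis")

print(refine_until_accepted(crop_specialist, orchestrator_review))
```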

The parallels to human team dynamics are striking and intentional. This is exactly the kind of communication that would happen among people named Sara, Tom, and Bob working on a complex project together. If Sara doesn't get the information she needs from Bob, she can't complete her part until she gets it. Everyone on the team understands where they fit in the process and what they're collectively trying to achieve. The agentic team replicates these coordination patterns in a way that allows work to proceed systematically toward a defined goal.

The end result is a highly specialized document, in this case, a thirty-five-page market garden business plan, customized to the specific person's location, budget, branding preferences, and all the other variables that make their situation unique. This output represents the culmination of all the specialist agents contributing their expertise in a coordinated workflow.

#4: Establish Human-in-the-Loop and Evaluation Systems to Ensure Quality

One of the most common concerns people raise about AI agents is quality control. AI systems are known to hallucinate, inventing information that sounds plausible but is actually false. While models are improving constantly, the risk of unreliable output is real and must be addressed systematically.

Sara is emphatic about the importance of maintaining what's called in AI development a human-in-the-loop in the process. In the market garden DocGen example, the system does not automatically send the completed business plan directly to the client. Instead, it sends the document to Roger's team for review. The team examines the output, makes any necessary edits, and ensures everything meets their standards before it goes to the customer. This human checkpoint is essential, especially in the early stages of agent deployment.
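The checkpoint can be enforced structurally, so agents have no path to the client at all. This hypothetical `ReviewQueue` sketch accepts drafts from agents, releases them only on human approval, and keeps each human edit as a (draft, final) pair that can later inform evaluations.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class ReviewQueue:
    """Hypothetical gate: agents submit drafts; only human approval releases them."""
    pending: list[str] = field(default_factory=list)
    sent: list[str] = field(default_factory=list)
    edits: list[tuple[str, str]] = field(default_factory=list)  # (agent draft, human final)

    def submit(self, draft: str) -> None:
        # Agents can only queue work here; nothing is delivered yet.
        self.pending.append(draft)

    def approve(self, index: int, edited: Optional[str] = None) -> str:
        """Called by a human reviewer, optionally with an edited version of the draft."""
        draft = self.pending.pop(index)
        final = draft if edited is None else edited
        if final != draft:
            self.edits.append((draft, final))  # keep the correction for later evals
        self.sent.append(final)  # stand-in for actually delivering to the client
        return final

queue = ReviewQueue()
queue.submit("Market garden plan v1")
queue.approve(0, edited="Market garden plan v1 (pH section corrected)")
print(queue.sent, queue.edits)
```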

The question then becomes whether this human feedback loops back into the system to improve future performance. The answer is yes, through a methodology called evaluations, or evals for short.

Sara explains evaluations through an analogy to hiring and managing team members. When you recruit team members, assign them roles, put them on a team, and give them work to do, you need some way to assess their performance. Evaluations function as the report card that tells each agent how it performed on its tasks.

An evaluation might score an agent's performance on a scale of one to five for specific aspects of its work. More importantly, it explains why the agent received that score. For example, the eval might indicate that an agent scored a three in a particular area because it used terminology that was overly corporate in tone when the client's brand voice is more casual and approachable. Or it might flag that the agent hallucinated certain facts that need to be corrected.
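A sketch of an eval as a report card: each result pairs a 1-to-5 score with the reason for it. The word-list tone check below is a hypothetical, deliberately crude rule that mirrors the "overly corporate" example; many teams instead have a language model grade outputs against a written rubric.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class EvalResult:
    agent: str
    criterion: str
    score: int   # 1 (poor) to 5 (excellent)
    reason: str  # why the agent got this score

    def __post_init__(self) -> None:
        if not 1 <= self.score <= 5:
            raise ValueError("score must be between 1 and 5")

# Hypothetical terms that clash with a casual brand voice.
CORPORATE_TERMS = {"synergy", "leverage", "stakeholders"}

def eval_tone(agent: str, text: str) -> EvalResult:
    """Deduct one point per corporate term found, with a floor of 1."""
    hits = sorted(term for term in CORPORATE_TERMS if term in text.lower())
    if not hits:
        return EvalResult(agent, "brand voice", 5, "Tone matches the casual brand voice.")
    return EvalResult(agent, "brand voice", max(1, 5 - len(hits)),
                      f"Overly corporate terms: {', '.join(hits)}.")

print(eval_tone("copywriter", "We will leverage synergy to grow"))
```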

These evaluation criteria become training data that feeds back into the system. The evals help define what good performance looks like. They show what a three out of five looks like versus a five out of five. Each time you conduct evaluations, you strengthen the system's understanding of quality standards. You strengthen the feedback loop so that you're creating a system that genuinely improves over time rather than one that hallucinates repeatedly with no mechanism for correction.

This evaluation process is ongoing. It's not something you do once during initial setup. As the agents run and produce outputs, regular evaluation ensures they maintain quality standards, identifies drift where performance gradually degrades, and catches new types of errors that emerge as the system encounters new situations.
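Drift can be watched with a simple comparison of recent eval scores against an earlier baseline. The window sizes and the 0.5-point tolerance below are hypothetical settings chosen for illustration, not values from the interview.

```python
from statistics import mean

def detect_drift(
    scores: list[float],
    baseline_window: int = 5,
    recent_window: int = 5,
    tolerance: float = 0.5,
) -> bool:
    """Flag drift when the recent average score falls more than `tolerance` below the baseline average."""
    if len(scores) < baseline_window + recent_window:
        return False  # not enough history to compare yet
    baseline = mean(scores[:baseline_window])
    recent = mean(scores[-recent_window:])
    return baseline - recent > tolerance

print(detect_drift([5, 5, 4, 5, 5, 4, 4, 3, 4, 3]))  # baseline 4.8, recent 3.6
print(detect_drift([5, 5, 4, 5, 5, 5, 4, 5, 5, 5]))  # no meaningful change
```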

The combination of human-in-the-loop review and systematic evaluations creates multiple layers of quality control. The human checkpoint prevents bad outputs from reaching end users. The evaluation system ensures that the agents learn from any mistakes and progressively improve their performance. Together, these mechanisms address the valid concerns people have about AI reliability while allowing businesses to benefit from the dramatic efficiency gains agents provide.

Sara acknowledges that because agent-building tools have become so accessible, there's a misconception that the work is easy. Anyone can now drag and drop to build an agent, but there's substantial work involved in ensuring those agents actually function properly. This distinction becomes even more critical as businesses move into the agentic era and begin giving these tools genuine autonomy.

Agents require ongoing maintenance. Models change as providers release updates. Business procedures evolve over time. Something called prompt drift can occur, where the quality of outputs gradually degrades for various reasons. Large language models themselves are frequently updated with new capabilities and behaviors. All of this means that AI agents aren't a set-it-and-forget-it solution.

The maintenance requirements include monitoring performance through evaluations, updating prompts and instructions as needed, swapping in new or better models when they become available, and adjusting agent behaviors as business needs change. In the hype around AI agents, these operational realities often get lost. Agents are incredibly powerful tools when built properly, but there is genuine work required to make them function the way you need them to for your specific business context.

The businesses that will succeed with AI agents are those that approach implementation methodically, following proven processes rather than rushing to deploy autonomous systems without a proper foundation. They build a deep understanding of their workflows first. They create mock-ups and test extensively. They evolve assistants gradually into agents with increasing autonomy. They maintain human oversight while implementing systematic quality control through evaluations. They recognize that agents require ongoing maintenance and improvement.

This measured, evolutionary approach may seem slower than the revolutionary promises often made about AI. But it's the approach that produces agents that actually work reliably in real business environments, deliver genuine value, and avoid the pitfalls that have given some AI implementations a reputation for chaos and unreliability. For businesses willing to invest in doing it right, AI agents represent a genuine opportunity to scale expertise, capture competitive advantage through customization, and accomplish things that simply weren't possible before.

Sara Davison is an agentic AI practitioner specializing in AI agent deployment to scale businesses. She is the co-founder of AI Build Lab Accelerator and leads Maivenly, an agency focused on business transformation through AI implementation. Follow her on LinkedIn.

Other Notes From This Episode

- Connect with Michael Stelzner @Stelzner on Facebook and @Mike_Stelzner on X.

- Watch this interview and other exclusive content from Social Media Examiner on YouTube.

Listen to the Podcast Now

This article is sourced from the AI Explored podcast. Listen or subscribe below.

Where to subscribe: Apple Podcasts | Spotify | YouTube Music | YouTube | Amazon Music | RSS

✋🏽 If you enjoyed this episode of the AI Explored podcast, please head over to Apple Podcasts, leave a rating, write a review, and subscribe.
